The Kafka Upgrade: Essential Insights for Leaving Your Legacy ESB Behind

Have you ever looked at your legacy Enterprise Service Bus (ESB) and felt like you were driving a horse-drawn carriage on a modern highway? Platforms like TIBCO BusinessWorks, IBM WebSphere, and Oracle Service Bus once represented the cutting edge of enterprise integration. For years, they stitched together critical business processes.

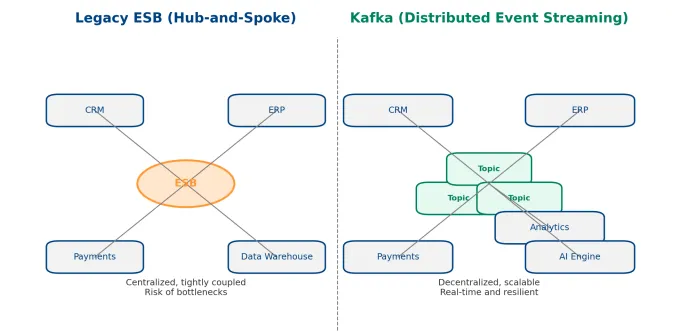

But today, the world moves at digital speed. The very features that once made ESBs powerful (centralized control, orchestration, and synchronous communication) have become bottlenecks. They slow innovation, limit scalability, and introduce fragility at precisely the time when enterprises need agility and resilience.

Enter Apache Kafka: a distributed event streaming platform built for real-time data flows, decentralized architectures, and horizontal scale. Migrating to Kafka isn’t simply replacing old pipes with new ones. It’s about rethinking how your business communicates, scales, and innovates. Done right, it’s the difference between creaking along the highway and gliding in a high-speed train.

Step 1: Understand Your Legacy System’s DNA

Every modernization journey starts with understanding what you’re leaving behind.

Too many organizations rush to adopt the new without unpacking the quirks, shortcuts, and hidden dependencies of their existing ESB.

Think of it as looking under the hood of that horse-drawn carriage — you need to know where the wheels wobble before you can design a car.

- Message Flows & Routing: Legacy ESBs thrive on routing logic. Map every path — sources, destinations, timing, and volume.

- Protocols & Transformations: ESBs are polyglots. They translate HTTP to JMS, SOAP to JSON, XML to flat files.

- Service Orchestration: ESBs orchestrate everything from one central stage. Kafka flips the model into choreography, where each service reacts to events on its own.

- Adapters & Connectors: ESBs rely on adapters to reach databases, files, and packaged applications. In Kafka, Kafka Connect fills that role.

- Error Handling & Monitoring: Legacy flows often assume the “happy path.” With Kafka, you design retries, dead-letter topics (DLTs), and exception flows explicitly.

- Security & Logging: Outdated security won’t cut it. Migration means modern authentication and observability.
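
One way to make this assessment concrete is to keep the inventory as structured data instead of a diagram. The sketch below is a minimal illustration, not a prescribed tool: the `EsbFlow` record, its fields, and the sample flows are all hypothetical, chosen to mirror the items in the list above (source, destination, protocol, timing style, volume).

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class EsbFlow:
    """One message flow found in the legacy ESB (hypothetical record shape)."""
    name: str
    source: str
    destination: str
    protocol: str       # e.g. "JMS", "SOAP", "HTTP"
    synchronous: bool   # request/reply flows usually need the most redesign
    msgs_per_day: int


def summarize(flows):
    """Summarize the inventory: protocols in use, synchronous flows, total volume."""
    return {
        "by_protocol": dict(Counter(f.protocol for f in flows)),
        "synchronous": sorted(f.name for f in flows if f.synchronous),
        "total_msgs_per_day": sum(f.msgs_per_day for f in flows),
    }


# Sample data, invented for illustration only.
flows = [
    EsbFlow("order-intake", "web-shop", "erp", "SOAP", True, 40_000),
    EsbFlow("invoice-export", "erp", "billing", "JMS", False, 12_000),
    EsbFlow("stock-sync", "warehouse", "erp", "JMS", False, 250_000),
]

report = summarize(flows)
print(report)
```

A summary like this gives a first cut of scope: which protocols need connectors or translation, and which synchronous flows have to be rethought as events.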

Business takeaway: This deep assessment prevents surprises, helps scope effort, and uncovers hidden costs.

Step 2: Crafting the New Blueprint

With the past mapped out, it’s time to imagine the future.

A Kafka architecture isn’t just plumbing — it’s your new digital nervous system.

- Data as a Product: Treat streams as reusable assets. Define schemas (Avro, Protobuf) with clear ownership and compatibility rules.

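- Ordering & Parallelism: Many ESB flows process messages strictly in sequence. Kafka preserves order only within a partition, so you choose between strict order (a single partition, or one key per entity) and parallelism (more partitions).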

- Kafka Headers: Add metadata for context and traceability.

- Bridging Incompatibility: Not all systems speak Kafka — use wrappers, APIs, or translators for legacy systems.
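
The ordering trade-off is easier to see with a small simulation. The sketch below does not use a Kafka client. It only models the idea that a keyed message is mapped to a partition by hashing its key, so all events for one key stay in order while different keys can be processed in parallel. The hash is a stand-in (`zlib.crc32`); Kafka’s default partitioner uses a different hash (murmur2), and the keys, payloads, and partition count here are made up.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 6  # assumed partition count for the illustration


def choose_partition(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a key to a partition. Stand-in hash, not Kafka's actual partitioner."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign(events, num_partitions: int = NUM_PARTITIONS):
    """Append each (key, payload) event to the partition chosen for its key."""
    partitions = defaultdict(list)
    for key, payload in events:
        partitions[choose_partition(key, num_partitions)].append((key, payload))
    return partitions


def events_for(partitions, key: str):
    """Read back the payloads for one key, in the order its partition holds them."""
    partition = partitions[choose_partition(key)]
    return [payload for k, payload in partition if k == key]


# Interleaved events for two orders, invented for illustration.
events = [
    ("order-1", "created"),
    ("order-2", "created"),
    ("order-1", "paid"),
    ("order-2", "cancelled"),
    ("order-1", "shipped"),
]

partitions = assign(events)
print(events_for(partitions, "order-1"))
print(events_for(partitions, "order-2"))
```

Because every event for `order-1` lands in the same partition, its lifecycle is read back in the order it was written, regardless of how many other orders are in flight. If a flow needs one global order across all keys, the usual cost is a single partition and therefore a single consumer in the group.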

Business takeaway: A thoughtful design avoids costly rewrites later.

Step 3: Building and Operating with Excellence

Producers: The Source of Truth

Producers are data gatekeepers: they capture every event and stream it to Kafka.

- Keep them close to source systems.

- Avoid heavy logic; save processing for consumers.

- Use retries and error topics so failed events are not silently lost.

Think of producers as reporters on the ground — they observe, record, and deliver.
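
The retry-then-error-topic idea can be sketched without tying it to a specific client library. In the example below, `send` is a hypothetical callable standing in for whatever producer client you use, and `TransientSendError`, the header names, and the topic names are assumptions made for illustration. Real Kafka producer clients also have their own built-in retry settings, which this application-level sketch does not replace.

```python
import time
import uuid


class TransientSendError(Exception):
    """Hypothetical error type for a send failure that is worth retrying."""


def publish_with_retry(send, topic, key, value, error_topic,
                       source="order-service", max_attempts=3, backoff_s=0.0):
    """Try the main topic a few times, then fall back to an error topic.

    Returns the name of the topic the event was finally written to.
    """
    # Headers carry context for tracing; the names are illustrative.
    headers = {"event-id": str(uuid.uuid4()), "source": source}
    for attempt in range(1, max_attempts + 1):
        try:
            send(topic, key, value, headers)
            return topic
        except TransientSendError:
            if attempt < max_attempts:
                time.sleep(backoff_s * attempt)  # simple linear backoff
    # All attempts failed: park the event so it can be inspected and replayed.
    headers["original-topic"] = topic
    send(error_topic, key, value, headers)
    return error_topic


# A fake sender that fails the first two writes to "orders".
sent = []
failures = {"orders": 2}


def fake_send(topic, key, value, headers):
    if failures.get(topic, 0) > 0:
        failures[topic] -= 1
        raise TransientSendError(topic)
    sent.append((topic, key, value, dict(headers)))


result = publish_with_retry(fake_send, "orders", "order-1", b"{}", "orders.errors")
print(result, len(sent))
```

The producer stays thin: it adds identifying headers, retries, and parks failures, but leaves business logic to consumers. Note that if the cluster itself is unreachable, a write to the error topic would fail too, so a real producer also needs a local fallback such as logging or a durable buffer.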

Consumers: The Data Processors

Consumers transform raw feeds into useful outputs.

- Apply filtering, enrichment, or transformation.

- Handle errors gracefully, separating “happy path” from problem cases.

- Use retries, error queues, and Dead-Letter Topics.

- Consider Kafka Streams or Flink for complex workflows.
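
Separating the happy path from problem cases usually comes down to classifying failures. The sketch below is one possible shape, written against plain Python lists, not a Kafka consumer: `RetriableError`, `PoisonMessageError`, the `enrich` handler, and the sample records are all hypothetical. Retriable failures get a bounded number of attempts. Records that can never succeed go straight to a dead-letter list, which stands in for a Dead-Letter Topic.

```python
import json


class RetriableError(Exception):
    """Hypothetical: a failure that may succeed on retry (e.g. a timeout)."""


class PoisonMessageError(Exception):
    """Hypothetical: a record that can never be processed as-is."""


def enrich(raw):
    """Parse a JSON record and fill in a default currency (illustrative logic)."""
    try:
        order = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise PoisonMessageError(f"invalid JSON: {exc.msg}") from exc
    order.setdefault("currency", "EUR")
    return order


def process(records, handler, max_attempts=3):
    """Run handler on each record; collect successes and dead letters separately."""
    processed, dead_letters = [], []
    for raw in records:
        for attempt in range(1, max_attempts + 1):
            try:
                processed.append(handler(raw))
                break
            except PoisonMessageError as exc:
                # Retrying cannot help, so dead-letter immediately.
                dead_letters.append({"record": raw, "reason": str(exc), "attempts": attempt})
                break
            except RetriableError as exc:
                if attempt == max_attempts:
                    dead_letters.append({"record": raw, "reason": str(exc), "attempts": attempt})
    return processed, dead_letters


records = ['{"id": 1}', "not-json", '{"id": 2, "currency": "USD"}']
processed, dead_letters = process(records, enrich)
print(processed)
print(dead_letters)
```

Keeping the failure reason and attempt count with each dead letter makes later triage and replay much easier than a bare copy of the payload.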

Operational Foundations

- Standardization: Provide reusable templates.

- Observability: Monitor consumer lag, broker health, connectors.

- Continuous Modernization: Keep libraries current.

- Phased Rollout: Start with non-critical flows, then scale.
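
Consumer lag, mentioned under observability, is simple arithmetic: for each partition, the latest offset written minus the offset the consumer group has committed. The sketch below shows only that calculation on made-up numbers. How you obtain the offsets (client API, CLI tooling, or a metrics exporter) depends on your setup and is not shown, and treating a missing committed offset as 0 is an assumption of this example.

```python
def consumer_lag(end_offsets, committed_offsets):
    """Per-partition lag: latest written offset minus committed offset."""
    lag = {}
    for partition, end in end_offsets.items():
        # Assumption: a partition with no committed offset counts from 0.
        committed = committed_offsets.get(partition, 0)
        lag[partition] = max(end - committed, 0)
    return lag


def partitions_over(lag, threshold):
    """Partitions whose lag exceeds an alerting threshold."""
    return sorted(p for p, value in lag.items() if value > threshold)


# Illustrative offsets for a three-partition topic.
end_offsets = {0: 1500, 1: 980, 2: 2200}
committed_offsets = {0: 1500, 1: 900, 2: 1100}

lag = consumer_lag(end_offsets, committed_offsets)
print(lag)
print(sum(lag.values()))
print(partitions_over(lag, threshold=500))
```

Watching the trend matters more than any single reading: lag that keeps growing on one partition often points to a hot key or a stuck consumer, not to a general capacity problem.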

Business takeaway: This disciplined approach keeps the system reliable, minimizes migration risk, and accelerates value.

The Payoff

So, why go through all this effort? Because the rewards are transformative:

- Agility: Launch new services in weeks, not months.

- Scalability: Scale horizontally without hitting ESB bottlenecks.

- Resilience: Eliminate single points of failure with decentralized design.

- Insight: Unlock real-time analytics, AI integration, and smarter business decisions.

Migrating from a legacy ESB to Kafka isn’t just a technical upgrade — it’s a strategic leap.

Readiness Checklist: 5 Questions Before You Migrate

- Have we mapped all critical ESB message flows and dependencies?

- Do we have a schema governance strategy (e.g., Avro, Protobuf)?

- Are our teams prepared for decentralized, event-driven design?

- Can we implement robust monitoring and observability?

- Do we have a non-critical pilot use case to start with?

If you answered “yes” to most, you’re ready for a Kafka migration strategy.

Visual Blueprint: ESB vs Kafka

Disclaimer: Apache Kafka is a trademark of the Apache Software Foundation.

Other product names mentioned (e.g., TIBCO, IBM WebSphere, Oracle Service Bus) are the trademarks of their respective owners.

References are for descriptive and comparative purposes only.

Ready to Modernize Your Integration Landscape?

Whether you’re just assessing your legacy ESB or planning a full migration, our team can help you design a future-ready Kafka architecture.

Contact us to schedule a free readiness assessment.
